Why Responsible AI Matters

As AI plays a larger role in our lives, from identifying diseases to reviewing job applications, it raises challenging questions. People are focusing less on what AI is capable of and more on what it ought to do. This marks a significant shift from innovation to responsible use.

This is where the concept of responsible AI becomes relevant. It involves creating and utilizing these technologies in ways that honor individuals, maintain values, and generate more benefits than drawbacks.

As generative AI produces results that affect real decisions, managing and assessing those results has become a crucial responsibility.

Responsible AI involves creating and utilizing AI systems that are ethical, fair, and accountable. It establishes a basis for trust, making sure that as AI develops, it remains focused on serving people rather than undermining principles.

In this article, we explore the concept of responsible AI, its key dimensions, the RAIL framework, and how to apply it in practice.

What is Responsible AI?

Artificial intelligence (AI) is becoming essential across industries, but with its capabilities comes a duty.

In the realm of AI, being "responsible" means making sure that AI systems are created, implemented, and used in ethical, transparent, and accountable ways.

Responsible AI is an approach to creating, evaluating, and implementing AI systems in a safe, ethical, and trustworthy manner. As defined by the Organisation for Economic Co-operation and Development (OECD), Responsible AI refers to AI that is innovative and trustworthy while upholding human rights and democratic principles.

AI systems are the result of numerous choices made by their developers. Responsible AI provides guidance for these choices, from establishing the system's purpose to how users interact with it, aiming for AI outputs that are more beneficial and fairer.

It prioritizes people and their objectives in the design process and honors values like fairness, reliability, and transparency.

This definition shapes how Responsible AI Labs (RAIL) approaches the RAIL Score: as a measure for AI that not only pushes technological boundaries but also respects individual rights and aligns with ethical norms.

At RAIL, we believe that the ethical development of AI should be considered at every stage of its lifecycle, which consists of three key phases:

RAIL Framework: Eight Dimensions for AI Output Evaluation

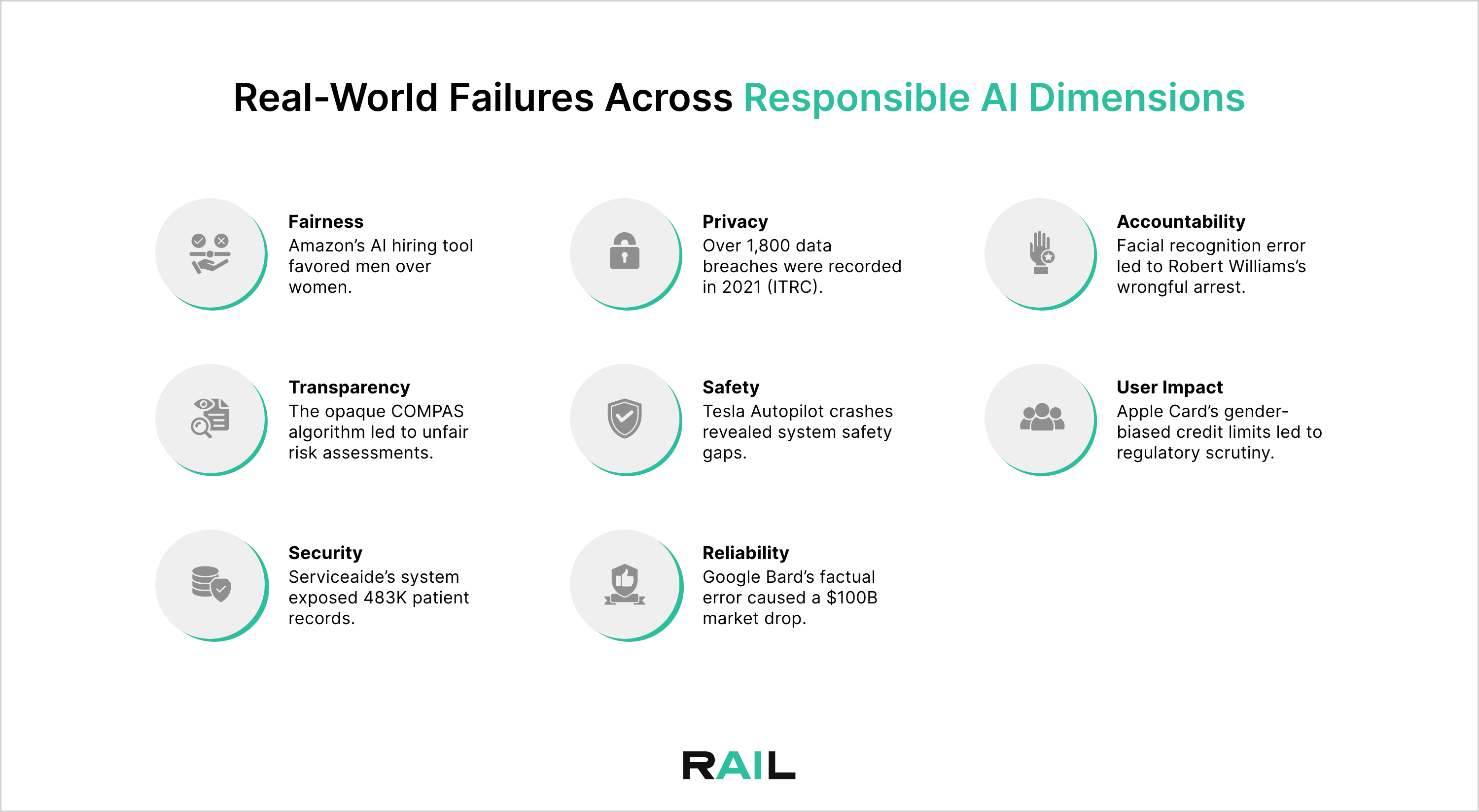

The RAIL Framework emphasizes eight key aspects of Responsible AI that are crucial for making AI systems ethical, transparent, and trustworthy.

These aspects include Fairness, Safety, Privacy, Reliability, Security, Transparency, Accountability, and User Impact. Each aspect focuses on important areas in the creation, implementation, and oversight of AI systems to ensure they meet ethical standards and regulations.

Fairness

The fairness challenge of responsible AI highlights the necessity for AI systems to treat every individual or group equally, avoiding favoritism based on race, gender, or socioeconomic status.

This is a major issue, as there have been cases where AI algorithms have unintentionally reinforced existing inequalities. To address it, organizations can adopt fairness metrics such as equalized odds, which requires a model's true positive and false positive rates to be similar across groups, so that the algorithm's errors do not fall disproportionately on specific groups.

Furthermore, organizations should guarantee that their data is diverse and representative of all groups and conduct comprehensive data analysis to uncover any biases or disparities within the dataset.

For example, as reported by Reuters, Amazon scrapped its experimental AI recruiting tool after finding that it was biased against women. The algorithm, which was trained on past hiring data, learned to prefer male candidates and penalized resumes containing words like "women's," showing how biased data can produce unfair and discriminatory results.
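To make the equalized odds metric mentioned above more concrete, here is a minimal sketch in plain Python. It assumes binary labels and predictions (1 = favorable outcome) and a group label per record. The function names, the toy data, and the groups "A" and "B" are all made up for illustration and are not part of any RAIL tooling.

```python
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Return {group: (true_positive_rate, false_positive_rate)}."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            counts[group]["tp" if pred == 1 else "fn"] += 1
        else:
            counts[group]["fp" if pred == 1 else "tn"] += 1

    rates = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        tpr = c["tp"] / positives if positives else 0.0
        fpr = c["fp"] / negatives if negatives else 0.0
        rates[group] = (tpr, fpr)
    return rates


def equalized_odds_gap(y_true, y_pred, groups):
    """Largest between-group difference in TPR or FPR (0.0 means parity)."""
    rates = group_rates(y_true, y_pred, groups)
    tprs = [tpr for tpr, _ in rates.values()]
    fprs = [fpr for _, fpr in rates.values()]
    return max(max(tprs) - min(tprs), max(fprs) - min(fprs))


# Toy, made-up data: 1 = "advance candidate"; groups "A" and "B" are hypothetical.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap = equalized_odds_gap(y_true, y_pred, groups)
print(f"equalized odds gap: {gap:.2f}")
```

A gap near zero suggests the model makes errors at similar rates for each group. What counts as an acceptable gap depends on the application and is a judgment call for the team, not something this sketch can decide.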

Transparency

A major challenge in responsible AI is transparency; this means making sure that stakeholders can grasp how an algorithm makes its decisions.

This is important because it lets people scrutinize decisions and builds trust in the system's results. To address this issue, organizations can use methods like feature selection, which identifies the most important features for a specific task so they can be explained to stakeholders.

In addition, organizations should ensure that their algorithms produce interpretable results that are easy for people to understand.

For example, the "COMPAS" algorithm, used in the U.S. criminal justice system, has sparked significant debate due to its lack of transparency. This AI tool, intended to estimate the likelihood of defendants reoffending, faced criticism when investigations showed that its risk scores could not be understood by judges or defendants. Additionally, a 2016 analysis by ProPublica found that COMPAS was more likely to misclassify Black defendants as higher risk than their white counterparts.
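One way to show stakeholders which inputs a model actually relies on is permutation importance, a technique related to (but not the same as) the feature selection mentioned above: shuffle one feature at a time and measure how much accuracy drops. The sketch below is a minimal, library-free illustration. `toy_model` is a hypothetical stand-in for a trained model, and the data is randomly generated.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    hits = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return hits / len(rows)


def permutation_importance(model, rows, labels, n_features, repeats=20, seed=0):
    """Average accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)
            # Rebuild every row with feature j replaced by a shuffled value.
            shuffled = [
                row[:j] + [value] + row[j + 1:]
                for row, value in zip(rows, column)
            ]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / repeats)
    return importances


# Hypothetical stand-in for a trained model: it only looks at feature 0.
def toy_model(row):
    return 1 if row[0] > 0.5 else 0


data_rng = random.Random(42)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [toy_model(row) for row in rows]

scores = permutation_importance(toy_model, rows, labels, n_features=2)
for name, score in zip(["feature_0", "feature_1"], scores):
    print(f"{name}: {score:.3f}")
```

Because the toy model ignores the second feature, shuffling it changes nothing and its importance is zero, while shuffling the first feature causes a large accuracy drop. A report like this is easier for a non-specialist to read than raw model internals.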

Security

In sectors dealing with sensitive information, like healthcare and finance, privacy and security are essential. Strong data protection measures prevent unauthorized access and ensure adherence to regulations such as GDPR. This emphasis not only preserves user trust but also minimizes legal risks for companies.

For example, a lawsuit filed in May 2025 claims that Serviceaide, an AI firm that provided systems to Catholic Health in New York, exposed the personal and medical information of 483,126 patients between September and November 2024. The exposed data included names, birth dates, Social Security numbers, medical record numbers, health insurance information, and additional details. The complaint asserts that Serviceaide did not encrypt sensitive data or remove unnecessary information, thereby breaching its privacy and security responsibilities.
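The two safeguards named in that complaint, protecting sensitive values and removing unnecessary information, can be illustrated with a small sketch. It shows data minimization (an allow-list of fields) and pseudonymization with a keyed hash from the Python standard library. This is not encryption; encrypting stored data would need a vetted cryptography library. The field names, the allow-list, and the key are hypothetical.

```python
import hashlib
import hmac

# Hypothetical allow-list: the only fields this imaginary workflow needs.
ALLOWED_FIELDS = {"patient_id", "appointment_date", "department"}
# Fields replaced by a keyed hash so records can still be linked
# without storing the raw identifier.
PSEUDONYMIZED_FIELDS = {"patient_id"}


def pseudonymize(value: str, key: bytes) -> str:
    """Return a keyed SHA-256 digest (HMAC) of the value as hex."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, key: bytes) -> dict:
    """Drop fields outside the allow-list and pseudonymize direct identifiers."""
    cleaned = {}
    for field, value in record.items():
        if field not in ALLOWED_FIELDS:
            continue  # unnecessary data is never stored
        if field in PSEUDONYMIZED_FIELDS:
            value = pseudonymize(str(value), key)
        cleaned[field] = value
    return cleaned


# Made-up record and key for illustration; a real key belongs in a secrets manager.
secret_key = b"example-key-do-not-use"
raw = {
    "patient_id": "MRN-000123",
    "ssn": "000-00-0000",
    "appointment_date": "2024-10-01",
    "department": "cardiology",
}

safe = minimize_record(raw, secret_key)
print(sorted(safe))
```

The Social Security number never reaches storage because it is not on the allow-list, and the patient identifier is stored only as a keyed digest.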

Privacy

AI systems frequently rely on extensive datasets, which may include sensitive personal information. This reliance exposes AI solutions to data breaches and to attacks from malicious actors seeking access to that data.

For example, the Identity Theft Resource Center reports that there were 1,862 data breaches in 2021, a 23% increase over the previous record set in 2017. Data breaches lead to financial losses and harm the reputation of businesses, while also putting individuals at risk if their sensitive information is exposed.

Safety

Safety describes an AI system's ability to function without harming users or the environment. It means avoiding physical harm, safeguarding data privacy, and ensuring protection against attacks or manipulation. It also includes the system's capacity to handle errors or failures gracefully, without disastrous results.

For example, incidents involving Tesla's Autopilot underline the vital need for safety in AI systems. Multiple inquiries by the U.S. National Highway Traffic Safety Administration (NHTSA) have investigated accidents involving Tesla cars that were using driver-assistance features, where the AI system reportedly did not detect obstacles or respond to changing conditions.

Reliability

Reliability means the AI system must perform consistently over time and in different situations. It should work as expected and provide dependable, accurate results. Reliability also includes the system's capacity to handle unusual cases or rare scenarios that seldom appeared in the training data.

For example, in 2023, Google's Bard, now known as Gemini, drew backlash for giving false information during its demonstration: it stated that the James Webb Space Telescope had captured the first images of an exoplanet, which was incorrect. The mistake was followed by a decline of more than $100 billion in Alphabet's market value in a single day, raising significant concerns about the trustworthiness of AI-generated information.

Accountability

Accountability in Responsible AI means that those who design, develop, and deploy AI systems must be responsible for how those systems function. It holds that AI should not, on its own, make critical decisions that affect people's lives, and it stresses the importance of human oversight.

To establish accountability, it is necessary to create industry standards and norms that direct the ethical use of AI technologies. This helps ensure that human values and ethical principles guide AI operations, preventing the technology from operating unchecked.

For example, the wrongful arrest of Robert Williams in 2020, caused by a flawed facial recognition match, is one of the most frequently cited cases showing the need for accountability in AI. The Detroit Police Department used an AI facial recognition tool that incorrectly identified Williams, a Black man, as a shoplifting suspect, resulting in his arrest and public humiliation. After this incident, the department updated its policies to ensure better human oversight and clearer accountability for decisions informed by AI.
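The human oversight described in this section can be sketched as a simple routing rule: any decision that is high impact, or that the model is not confident about, goes to a person, and every decision is logged with a named owner. The class names, the 0.95 threshold, and the owner labels below are illustrative assumptions, not a prescribed policy.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Decision:
    subject_id: str
    outcome: str
    confidence: float  # model's own confidence, 0.0 to 1.0
    high_impact: bool  # True when the outcome materially affects a person


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, decision: Decision, route: str, owner: str) -> None:
        """Store who is accountable for each decision and how it was routed."""
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "subject_id": decision.subject_id,
            "outcome": decision.outcome,
            "route": route,
            "owner": owner,
        })


def route_decision(decision: Decision, log: AuditLog, min_confidence: float = 0.95) -> str:
    """Send high-impact or low-confidence decisions to a human reviewer."""
    if decision.high_impact or decision.confidence < min_confidence:
        route, owner = "human_review", "review_team"
    else:
        route, owner = "auto", "model_owner"
    log.record(decision, route, owner)
    return route


log = AuditLog()
match = Decision("case-001", "possible_match", confidence=0.99, high_impact=True)
spam = Decision("msg-042", "spam", confidence=0.99, high_impact=False)
unsure = Decision("msg-043", "spam", confidence=0.60, high_impact=False)

print(route_decision(match, log))   # high impact overrides high confidence
print(route_decision(spam, log))    # low impact and confident
print(route_decision(unsure, log))  # confidence below the threshold
```

The key design choice is that high confidence alone never bypasses review for high-impact outcomes, so a confident but wrong match still reaches a human before anyone acts on it.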

User Impact

A suitable degree of transparency fosters trust among users and regulatory agencies. This dimension does not dictate which models to create and use, nor does it ban the use of "closed box" models.

The goal is to achieve a suitable level of transparency for each application and use case, allowing various users to comprehend and trust the results. Different situations and audiences necessitate distinct explanations. During the design process, it is important to determine which aspects of the solution require clarification, identify who needs those explanations, and define the most effective way to convey them.

Additionally, organizations should evaluate the reliability of their AI solutions and clearly communicate their intended purpose to ensure that users understand the system's role and limitations.

For example, in 2019, Apple's credit card, which was issued by Goldman Sachs, received criticism after users claimed that women were given much lower credit limits compared to men with similar financial profiles. The unclear workings of the algorithm and the company's failure to clarify how decisions were made resulted in accusations of gender bias and prompted an investigation by regulators.

Why Each Dimension Matters and How to Evaluate It

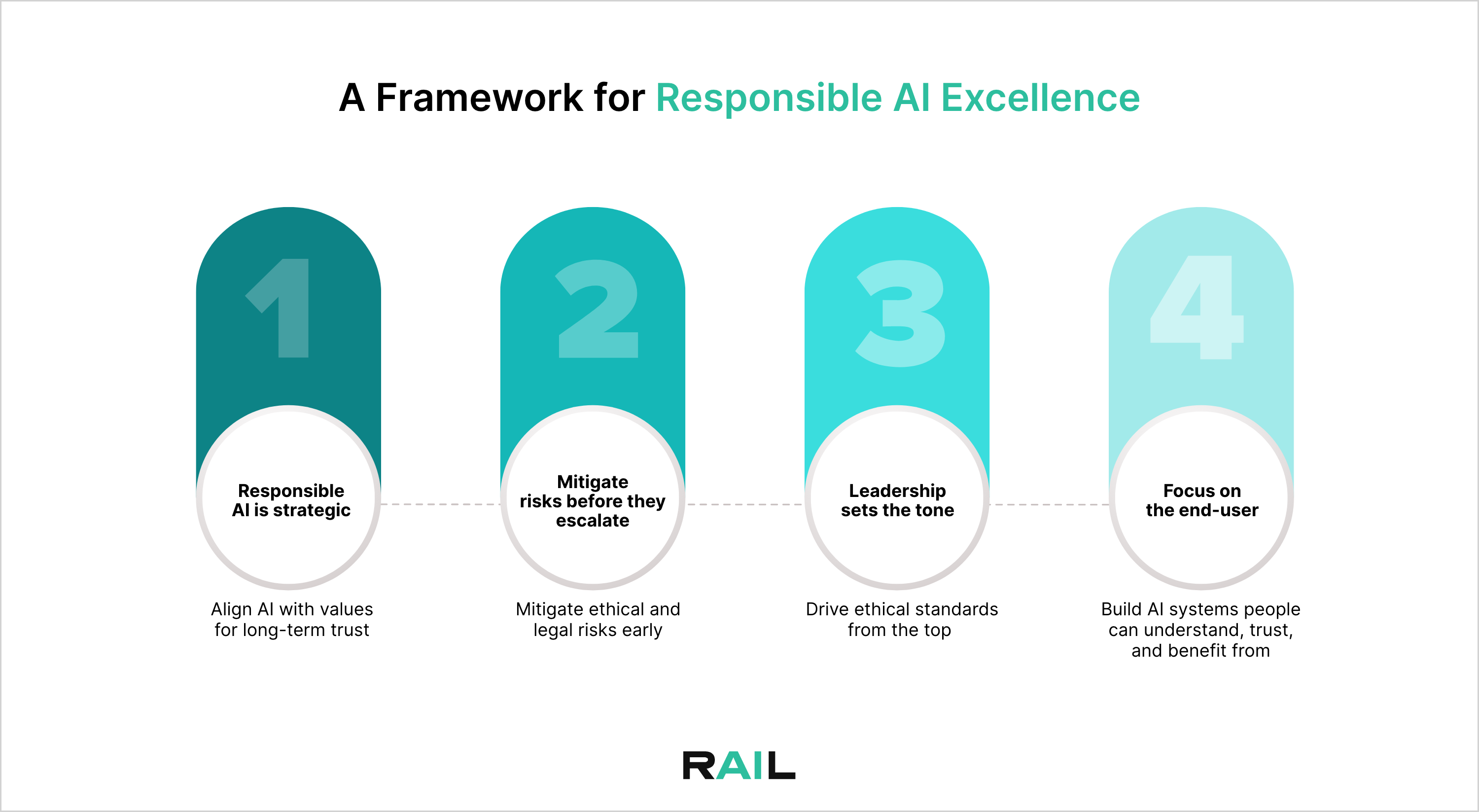

As agentic AI becomes essential to how organizations function, the duty to use it wisely lies firmly with leadership. This goes beyond avoiding negative publicity or meeting compliance requirements; it is about creating AI that embodies your values, safeguards your business, and builds enduring trust.

Applying RAIL in Practice

The RAIL Score is a tool to enhance your AI development. Each Responsible AI Labs metric, such as identifying bias for Fairness or detecting harmful language for Safety, provides useful feedback.

By using it, you're actively stopping problems before they arise. With laws like the EU's AI Act, demonstrating that your AI is ethically responsible is essential for business.

Consider it like a fitness tracker for your AI. Just as you would track steps or calories to maintain your health, the RAIL Score monitors your AI's ethical status, helping you adjust it for improved performance.
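To illustrate the fitness-tracker idea, the sketch below tracks one score per dimension, averages them, and flags any dimension that falls below a threshold. It is not the actual RAIL Score calculation or API: the 0 to 10 scale, the unweighted average, the 7.0 threshold, and the example scores are all assumptions made for illustration.

```python
# The eight dimensions named in this article, as hypothetical dictionary keys.
DIMENSIONS = (
    "fairness", "safety", "privacy", "reliability",
    "security", "transparency", "accountability", "user_impact",
)


def summarize(scores: dict, threshold: float = 7.0) -> dict:
    """Average the per-dimension scores and list dimensions below the threshold."""
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing scores for: {', '.join(missing)}")
    overall = sum(scores[d] for d in DIMENSIONS) / len(DIMENSIONS)
    flagged = [d for d in DIMENSIONS if scores[d] < threshold]
    return {"overall": round(overall, 2), "flagged": flagged}


# Made-up scores for one model response, on an assumed 0 to 10 scale.
example_scores = {
    "fairness": 6.0,
    "safety": 9.0,
    "privacy": 8.0,
    "reliability": 7.5,
    "security": 8.5,
    "transparency": 5.5,
    "accountability": 8.0,
    "user_impact": 7.5,
}

report = summarize(example_scores)
print(report["overall"])
print(report["flagged"])
```

Reporting the flagged dimensions alongside the average matters because a healthy overall number can hide one weak area, such as the low transparency score in this made-up example.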

Whether you're a developer fine-tuning a model or a business safeguarding your reputation, this tool keeps you in the lead.

RAIL Score is made to fit perfectly into your workflow, assessing and enhancing responses in real time. Here's how it functions:

Challenges and Future Trends

As AI becomes a core part of workflows and everyday life, it offers both unique opportunities and intricate challenges. What started with ChatGPT has grown into an ecosystem of tools that is changing creativity, communication, and productivity.

Yet, as its use grows, several trends will shape the future:

In summary, 2026 signifies a shift from experimentation to integration, where success will hinge on how responsibly organizations navigate this change, balancing innovation with fairness, transparency, and human oversight.