The Scale of the Challenge

Sprout Social's 2025 data shows that more than 5.24 billion people worldwide now use social media. This scale highlights the role these platforms play in shaping how people communicate, share information, and form opinions online.

As these platforms evolve, more people are using them to create, share, and exchange content.

The internet was meant to bring people together. It was envisioned as a vast, borderless realm where ideas could circulate freely, knowledge had no limits, and discussions could ignite revolutions. In many respects, it has achieved this.

Yet, the reality of today's online spaces often tells a different story. Instead of healthy engagement and connection, platforms increasingly struggle with spam bots, harassment, misinformation, and the ongoing tension between free expression and user safety.

As online spaces grow louder and more complex, building safer digital communities demands AI that is transparent, accountable, and responsibly designed.

AI's Role in Changing Content Moderation

AI-based content moderation goes beyond basic keyword detection. It continuously learns from emerging trends, adapts to new forms of harmful behavior, and interprets the tone and context of comments rather than simply flagging offensive words or banning users.

At the core of this approach are several advanced technologies that allow AI to understand context, intent, and behavior more effectively.

However, issues such as bias and ethical concerns persist. As platforms develop, they work to find a balance between technological progress and human supervision. This balance is foundational for ensuring fair and effective content moderation, which is essential for establishing trust and creating safe online environments.

Why Content Moderation Matters for Safe Digital Spaces

Content moderation is vital for keeping online spaces safe. It protects users from harmful and offensive material. Safety is key to creating a community where individuals feel secure.

Trust is another important factor. Good moderation fosters confidence among users. When content is well-managed, users are more inclined to engage and take part. This involvement benefits both the platform and its community.

Beyond safety and trust, compliance forms the third pillar of effective content moderation. Laws and regulations often mandate that platforms manage content properly. Not following these can result in serious repercussions. Therefore, content moderation ensures legal compliance and maintains industry standards.

In short, the key reasons content moderation matters are user safety, user trust, and legal compliance.

The Challenge of AI Content Moderation

Let's be honest: online conversations can be chaotic. We joke, use sarcasm, and express emotions in ways that do not map neatly onto programmed rules. While AI may identify "offensive" language, can it distinguish between a passionate discussion and deliberate harassment? Between robust critique and genuine threats? Between a lighthearted remark and a truly damaging one?

Context is everything. Yet, there are times when AI fails to grasp the humor.

We have already witnessed examples where automated content moderation has faltered:

Bias within AI is not merely a technical error; it mirrors the data upon which it is trained. This presents a significant issue.

Misjudged content, hidden bias, and lost context show why AI content moderation must be approached with care.

Human Moderators vs. Automated Solutions: Achieving the Optimal Balance

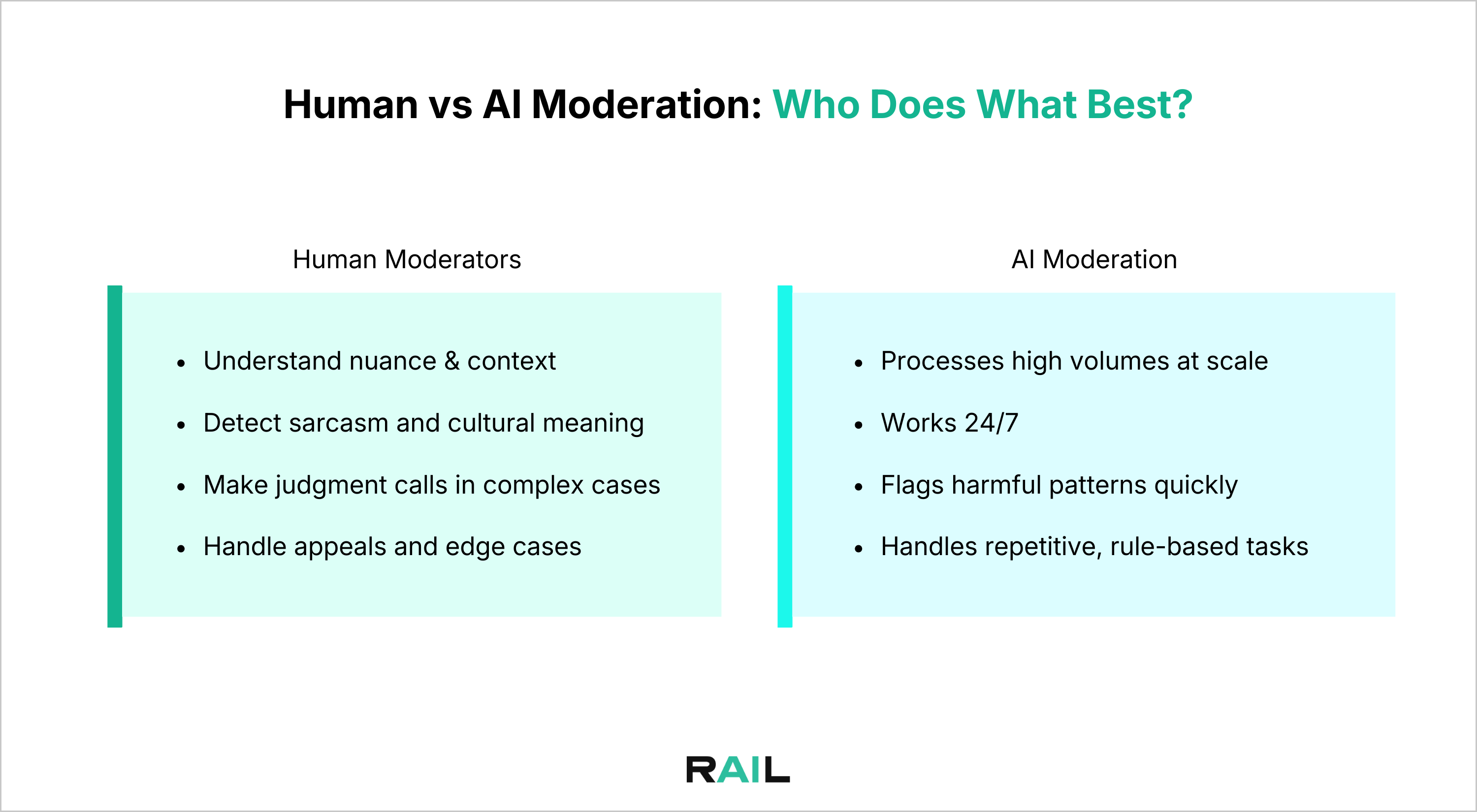

Striking a balance between human moderators and automated solutions is important as each method possesses distinct advantages and disadvantages. The integration of both approaches results in the most effective content moderation.

Human moderators are proficient in understanding context and subtlety. They can exercise judgment in complex situations that automated systems might misunderstand. However, relying solely on human moderators can be demanding and inconsistent.

Automated solutions are good at quickly processing large amounts of content. They work non-stop and manage simple tasks very fast. However, they may overlook context-sensitive content that requires human insight.

The best approach is a hybrid model. This method uses technology for efficiency while relying on human supervision for accuracy. The teamwork between machines and humans improves both speed and the quality of decisions.

Key elements of a well-rounded strategy include:

This balance ensures effective content moderation that is both comprehensive and detailed.
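One way to picture the hybrid model is as a routing step: the automated system handles the cases it is confident about, and everything in between goes to a person. The sketch below is illustrative only. It assumes a classifier that returns a harm score between 0 and 1, and the function name `route` and both threshold values are hypothetical, not taken from any particular platform or tool.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str   # "remove", "approve", or "human_review"
    score: float  # harm score the decision was based on


def route(score: float, remove_at: float = 0.95, approve_below: float = 0.05) -> Decision:
    """Route one item based on an assumed harm score in [0, 1].

    Thresholds are illustrative; a real platform would tune them
    against its own data and policies.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= remove_at:
        # High-confidence harmful content: act automatically.
        return Decision("remove", score)
    if score < approve_below:
        # High-confidence benign content: publish without review.
        return Decision("approve", score)
    # Ambiguous content: send to a human moderator for context.
    return Decision("human_review", score)
```

Widening the band between the two thresholds sends more content to human moderators, trading speed for accuracy. Narrowing it does the opposite.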

Implications for the Future of Online Communities

We are moving toward a digital landscape where artificial intelligence is no longer a passive element but actively shapes how we interact. The important questions are:

These questions highlight the delicate balance AI moderation must strike between protection and participation.

As user-generated content grows, reviewing it before publication becomes increasingly difficult.

AI-powered content moderation helps by protecting moderators, improving platform safety, and reducing manual effort. The most effective solution combines AI with human oversight.

But implementing this balance in practice requires the right framework, one that embeds responsibility, fairness, and transparency into AI systems from the start.

As online content expands and risks evolve, moderation cannot rely on guesswork. Evaluating AI-generated content across key dimensions, including safety, fairness, reliability, and transparency, helps teams identify risks before deployment.

Responsible AI technology can even trigger content regeneration when outputs fall below safety or fairness thresholds, helping ensure outputs align with organizational values and ethical standards.
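The regeneration idea can be sketched as a simple loop: generate a draft, score it, and try again if any score falls below its threshold. This is a minimal sketch under stated assumptions. The `generate` and `evaluate` callables, the 0-to-1 scoring scale, and the threshold values are all hypothetical placeholders for whatever model and evaluation tooling a team actually uses.

```python
from typing import Callable, Dict, Optional

# Hypothetical minimum scores per dimension, each on a 0.0-1.0 scale.
THRESHOLDS: Dict[str, float] = {"safety": 0.8, "fairness": 0.8}


def passes(scores: Dict[str, float], thresholds: Dict[str, float] = THRESHOLDS) -> bool:
    """Return True only if every required dimension meets its minimum.

    A dimension missing from `scores` is treated as 0.0, so it fails.
    """
    return all(scores.get(dim, 0.0) >= minimum for dim, minimum in thresholds.items())


def generate_with_checks(
    generate: Callable[[], str],
    evaluate: Callable[[str], Dict[str, float]],
    thresholds: Dict[str, float] = THRESHOLDS,
    max_attempts: int = 3,
) -> Optional[str]:
    """Regenerate until an output passes all thresholds or attempts run out."""
    for _ in range(max_attempts):
        candidate = generate()
        if passes(evaluate(candidate), thresholds):
            return candidate
    # No candidate passed; the caller should escalate, e.g. to human review.
    return None
```

Capping the number of attempts keeps the loop from running indefinitely, and returning `None` makes the failure explicit so it can be handed to a human rather than silently published.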

Designed for both developers and content teams, responsible AI frameworks make it easier to integrate ethical practices into workflows without slowing innovation. With the right tools, organizations can move beyond reactive moderation toward proactive, measurable, responsible AI governance.